What if the data center powering your AI assistant is quietly drawing as much electricity as a small city? As AI surges, hyperscale campuses, edge nodes, microgrids, advanced cooling, and novel energy sources all collide at the frontier of tech and sustainability. This post looks at how “green” gets real, and where regulation, community pushback, and emerging power models are reshaping the backbone of the digital age.

Imagine this: a cluster of data centers, as big as a small city, buried behind thick walls and humming day and night, with AI models, cloud workloads, streaming, and real-time analytics all being crunched inside. Now imagine that cluster is drawing 100–500 megawatts of continuous power, with cooling systems consuming millions of liters of water, rejecting heat into the surroundings, stressing local grids, and stirring community outrage. This is not science fiction. It is happening right now at the hyperscale frontier of digital infrastructure.

The hidden backbone of your daily life (cloud drives, recommendation engines, chatbots, real-time apps) is evolving rapidly—and the more we rely on AI, the more profound the challenges behind that hum become.

In this post, I want to take you on a journey inside the future of hyperscale and campus data centers, edge computing, cooling innovations, energy integration, social conflict, policy forces, cost models, hardware trends, and the job horizon. Let’s pull back the curtain together—and figure out how we build a digital world that doesn’t burn our planet.

1. Hyperscale & Campus Data Centers: The Beating Heart of the AI Era

Over the past decade, the architecture of internet infrastructure has shifted decisively toward hyperscale data centers—massive, sprawling campuses capable of supporting AI, cloud, high-throughput computing, and storage workloads at staggering scale. These are not your run-of-the-mill server farms; they are mega-facilities built to deliver resilience, performance, and power density that would have been unimaginable a generation ago.

-

These hyperscale sites often draw tens to hundreds of megawatts—some even aiming for 100+ MW to 500+ MW footprints.

-

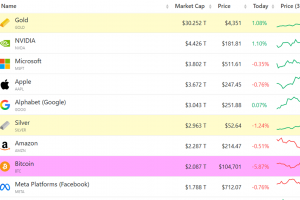

Goldman Sachs estimates that by 2030, data center power demand could rise ~165% above 2023 levels.

-

The IEA’s Energy & AI report forecasts global data center electricity use will more than double from ~415 TWh in 2024 to ~945 TWh by 2030.

-

Even with efficiency improvements, the raw scale of growth is eye-popping—data centers rising from ~1.5% of global electricity usage to nearly 3% by 2030.
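To get a feel for what "more than double" means year over year, here is a small back-of-the-envelope sketch. It only uses the IEA figures quoted above (~415 TWh in 2024, ~945 TWh in 2030) and assumes smooth compound growth, which real demand will not follow exactly.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


# IEA figures quoted in the text; the smooth-growth assumption is mine.
start_twh, end_twh = 415.0, 945.0
years = 2030 - 2024

growth_factor = end_twh / start_twh
annual_rate = cagr(start_twh, end_twh, years)

print(f"growth factor: {growth_factor:.2f}x")
print(f"implied annual growth: {annual_rate:.1%}")
```

Under that assumption the forecast works out to a bit under 15% growth per year, sustained for six years.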

Why hyperscale? Because AI pushes demand for high-density compute, low latencies, vast memory, and redundancy. These campuses allow for smooth scaling, redundant power and cooling, high-speed interconnects, and economies of scale. But they also magnify the challenges.

Some operators adopt the campus data center model, a cluster of smaller buildings across a contiguous site, to balance flexibility, risk isolation, and modular growth. These sites still require high power capacity, nearby substations, and careful thermal and electrical planning.

2. AI Growth & Power Demand: The Surge Pushing Limits

AI is not just another workload—it’s a power-hungry force multiplier in the infrastructure equation.

AI’s energy footprint

- Every inference, every model update, every fine-tuning run costs power. Large language models, vision models, recommendation engines: all of these compound the load.
- Empirical measurements show that an 8-GPU NVIDIA H100 HGX node can draw ~8.4 kW in practice (slightly below nameplate) under training workloads. As AI workloads become more pervasive (from cloud to edge), the aggregate demand is enormous.
- Goldman Sachs projects that AI’s share of total data center energy will climb to ~27% by 2027 (from ~14% today). Some models estimate that by 2030, AI-powered data centers in the U.S. alone might require an extra ~14 GW of capacity.

Doubling & tripling demand

- The IEA scenario sees global electricity demand from data centers more than doubling by 2030.
- BloombergNEF forecasts U.S. data center demand rising from ~35 GW today to ~78 GW by 2035.
- Deloitte’s more aggressive model envisages AI power demand in the U.S. rising from ~4 GW today to 123 GW by 2035 (roughly a 30x jump).

In short: AI isn’t a small delta. It rewrites the consumption baseline for the entire digital economy.
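To connect the per-node number to campus scale, here is a rough sketch. It takes the ~8.4 kW node figure from above and assumes, purely for illustration, that an entire 100 MW budget goes to GPU nodes running flat out. Networking, storage, and cooling are ignored, so the real node count would be lower.

```python
NODE_KW = 8.4            # measured draw of one 8-GPU H100 HGX node (from the text)
GPUS_PER_NODE = 8
HOURS_PER_YEAR = 8760


def nodes_for_it_load(it_load_mw: float, node_kw: float = NODE_KW) -> int:
    """How many nodes fit in a given IT power budget (simplifying assumption:
    the whole budget is spent on GPU nodes)."""
    return int(it_load_mw * 1000 // node_kw)


def annual_energy_mwh(node_kw: float = NODE_KW, utilization: float = 1.0) -> float:
    """Energy one node uses in a year at the given average utilization."""
    return node_kw * HOURS_PER_YEAR * utilization / 1000


nodes = nodes_for_it_load(100)          # hypothetical 100 MW IT budget
gpus = nodes * GPUS_PER_NODE
per_node_mwh = annual_energy_mwh()

print(f"{nodes} nodes, {gpus} GPUs, {per_node_mwh:.1f} MWh per node per year")
```

Even this crude estimate lands at roughly twelve thousand nodes, each using about 74 MWh a year.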

3. Sustainability: Renewables, Carbon Ambitions & Trade-offs

To avoid catastrophic climate consequences, data centers must evolve from energy guzzlers to responsible stewards. Here’s how sustainability is threading through the evolution.

Renewable energy adoption & firm power

- Currently, many hyperscale operators source renewable energy credits (RECs) to “green” their consumption, but that’s only half the solution. Real-time carbon matching and firm zero-carbon generation matter.
- Some tech firms are going further: Microsoft signed a power purchase agreement with Constellation to support restarting a reactor at Three Mile Island to power data centers.
- Google is exploring partnerships with small nuclear projects (e.g. Kairos Power) to secure dispatchable clean energy. The IEA expects renewables to expand by ~450 TWh to help meet data center demand growth, but wind and solar face intermittency challenges.

Efficiency, carbon-aware scheduling, dynamic cooling

- Advanced scheduling systems can shift workloads across data center clusters depending on carbon intensity and weather. A recent framework (Green-DCC) uses multi-agent reinforcement learning to jointly optimize cooling and workload distribution to minimize carbon emissions.
- On the cooling side, liquid cooling, direct-to-chip cooling, and hybrid approaches can reduce the PUE (power usage effectiveness) overhead of cooling.
- The Green Grid consortium advocates cross-industry standards and metrics to improve data center resource efficiency.
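To make carbon-aware scheduling concrete, here is a minimal sketch. It is not Green-DCC and uses no reinforcement learning; it is a plain greedy heuristic I wrote for illustration. The site names, carbon intensities, and job sizes are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Site:
    name: str
    carbon_g_per_kwh: float  # hypothetical grid carbon intensity
    free_kw: float           # spare power capacity at the site


@dataclass
class Job:
    name: str
    kw: float
    hours: float


def place_jobs(jobs: list[Job], sites: list[Site]) -> dict[str, Optional[str]]:
    """Greedy placement: the most energy-hungry job goes first, and each job
    lands on the cleanest site that still has room. Mutates site.free_kw."""
    placement: dict[str, Optional[str]] = {}
    for job in sorted(jobs, key=lambda j: j.kw * j.hours, reverse=True):
        candidates = [s for s in sites if s.free_kw >= job.kw]
        if not candidates:
            placement[job.name] = None  # nothing fits; leave unscheduled
            continue
        best = min(candidates, key=lambda s: s.carbon_g_per_kwh)
        best.free_kw -= job.kw
        placement[job.name] = best.name
    return placement


sites = [Site("hydro-north", 30, 500), Site("gas-south", 420, 2000)]
jobs = [Job("train-a", 400, 10), Job("batch-b", 200, 4), Job("etl-c", 50, 1)]
placement = place_jobs(jobs, sites)
print(placement)
```

The clean site fills up quickly, so the mid-sized job spills over to the dirtier one. That capacity-versus-carbon tension is what the more sophisticated schedulers try to optimize.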

Water footprint & environmental stress

- Traditional evaporative or water-cooled chillers consume immense amounts of water. A 100 MW data center might use ~2 million liters of water per day. The global water footprint of data centers is estimated at ~560 billion liters annually, and this may double if expansion is unchecked. Many new centers are being sited in water-stressed zones (Texas, Arizona, India) to exploit cheap land and power, but this compounds strain on local ecosystems.
- While data center emissions may increase, some argue AI could accelerate decarbonization in sectors like transportation, materials, and climate modeling, thus offsetting carbon. The IEA notes the possibility that AI’s utility in energy innovation could partially balance its demands.
- But that’s speculative. The baseline challenge remains: can data centers grow without doubling global emissions?
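The water figure is easier to compare across sites once it is normalized per unit of energy, in the spirit of the water usage effectiveness (WUE) metric, which is expressed in liters per kWh. This sketch uses the ~2 million liters per day and 100 MW from above, and assumes the facility runs at that load around the clock.

```python
def water_intensity_l_per_kwh(liters_per_day: float, load_mw: float) -> float:
    """Liters of water per kWh, assuming a constant load for 24 hours."""
    kwh_per_day = load_mw * 1000 * 24
    return liters_per_day / kwh_per_day


LITERS_PER_DAY = 2_000_000   # figure quoted in the text
LOAD_MW = 100                # figure quoted in the text

intensity = water_intensity_l_per_kwh(LITERS_PER_DAY, LOAD_MW)
annual_liters = LITERS_PER_DAY * 365

print(f"{intensity:.2f} L/kWh, {annual_liters / 1e6:.0f} million liters per year")
```

That works out to a little under one liter per kilowatt-hour, or around 730 million liters a year for a single site.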

4. Advanced Cooling: From Air to Liquid, Immersion & Smart Systems

Cooling is the Achilles’ heel of high-density compute. As GPUs, TPUs, ASICs, and next-gen accelerators push power density upward, conventional air cooling or evaporative systems struggle.

Innovations in cooling

- Liquid cooling / direct-to-chip: Coolant pipes deliver fluid directly to processor hot spots; reduction in thermal gradients means lower fan and chiller loads.
- Immersion cooling: Entire server modules are submerged in dielectric fluids. Because heat is removed more efficiently, the overhead of fans and air HVAC is largely eliminated. Some hyperscale sites are experimenting with this.
- Hybrid cooling: Blending air, liquid, and ambient cooling depending on conditions.
- Adaptive control & AI cooling: Using predictive control and ML, cooling systems can adjust flow, pressure, and pump speed dynamically based on workload and weather.
- Waste heat reuse / heat recovery: Capturing rejected heat to warm nearby buildings, data center complexes, or district heating systems.

Why it matters

- Cooling can account for 30–50% or more of the energy a data center uses beyond its IT load.
- Better cooling directly reduces PUE, targeting values like 1.1–1.2 rather than the 1.5–2.0 of older systems.
- Cooling strategies also influence water use, material use, and site layout (e.g. needing room for chillers, condensers).
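Since PUE is total facility energy divided by IT energy, the gap between those two ranges is easy to put in megawatts. The sketch below assumes a hypothetical 100 MW IT load held constant all year. The PUE values come from the ranges above.

```python
HOURS_PER_YEAR = 8760


def overhead_mw(it_load_mw: float, pue: float) -> float:
    """Non-IT power (cooling, power conversion, lighting) implied by a PUE.
    PUE = total facility power / IT power, so overhead = IT * (PUE - 1)."""
    return it_load_mw * (pue - 1)


IT_LOAD_MW = 100  # hypothetical constant IT load

old = overhead_mw(IT_LOAD_MW, 1.5)
new = overhead_mw(IT_LOAD_MW, 1.2)
saved_mwh = (old - new) * HOURS_PER_YEAR

print(f"overhead at PUE 1.5: {old:.0f} MW, at PUE 1.2: {new:.0f} MW")
print(f"annual saving: {saved_mwh:,.0f} MWh")
```

Going from 1.5 to 1.2 frees up about 30 MW of overhead on this example site, which is a sizeable facility's worth of power by itself.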

5. Edge Computing / Distributed Processing Near the Source

The age of monolithic centralized clouds is giving way to edge computing—small to medium facilities placed near users or data sources (factories, cell towers, municipalities, campuses).

Why edge matters

- Reduced latency for real-time applications (autonomous driving, AR/VR, industrial IoT).
- Lower network transit energy: By processing locally, less data traverses long-haul fiber, reducing backbone load and energy.
- Resilience: Local nodes can survive network partitions or outages.
- Flexibility in power sourcing: You can colocate edges near renewable generation, storage, or microgrids.

Sustainability + edge

- Edge nodes often require compact cooling systems, possibly liquid or air-based, but the scale is smaller, which allows experimentation.
- Past research (EASE) developed energy-aware scheduling for edge systems powered by local renewables + grid, balancing QoS and carbon footprint.
- Edge nodes can be part of a distributed energy ecosystem, responding dynamically to grid signals, shifting compute loads, and contributing to local flexibility.

Yet deploying many edge sites increases complexity, hardware lifecycle management, and synchronization challenges. The orchestration of hundreds or thousands of edge nodes demands robust software, telemetry, and control.

6. Energy Management, Grid Integration & Microgrids

A data center no longer lives in isolation. As demand soars, integration with grids, microgrids, and demand flexibility becomes central.

Grid and demand participation

- Hyperscale sites must negotiate with utilities, sometimes building behind-the-meter generation (solar, gas, fuel cells) and battery energy storage to moderate demand.
- Some utilities now require new data centers to guarantee base usage or pay for grid upgrades. In Ohio, a rule requires data centers >25 MW to pay for 85% of their anticipated energy use for 12 years, whether or not they actually consume it.
- Demand response: data centers can throttle non-critical workloads during grid stress peaks. But many operators prefer 24/7 utilization, limiting flexibility.
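Here is a minimal sketch of what throttling non-critical workloads might look like in software. The workload names, power numbers, and the "pause the largest deferrable job first" rule are all my own assumptions for illustration, not a description of any operator's system.

```python
def shed_load(workloads: list[tuple[str, float, bool]], cap_kw: float):
    """Pause deferrable workloads, largest first, until total draw fits
    under cap_kw. Each workload is (name, kw, deferrable).
    Returns (names_paused, remaining_kw)."""
    total = sum(kw for _, kw, _ in workloads)
    paused = []
    for name, kw, deferrable in sorted(workloads, key=lambda w: w[1], reverse=True):
        if total <= cap_kw:
            break  # already under the grid's requested cap
        if deferrable:
            paused.append(name)
            total -= kw
    return paused, total


# Hypothetical fleet: only the user-facing API is marked as non-deferrable.
workloads = [
    ("inference-api", 600, False),
    ("training-run", 900, True),
    ("batch-reindex", 300, True),
    ("backup", 100, True),
]
paused, remaining_kw = shed_load(workloads, cap_kw=1200)
print(paused, remaining_kw)
```

Note that if the critical workloads alone exceed the cap, this function simply returns a total above it. A real system would need an escalation path for that case.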

Microgrids and autonomy

- On-site microgrids combining PV, wind, battery storage and backup generators offer partial energy independence.
- Some operators are pursuing grid islanding, especially in constrained geographies where utility connections are expensive or unreliable.
- In smart integration, data centers can act as virtual power plants, feeding excess capacity or stored energy back into grids at critical moments.
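A microgrid controller ultimately has to decide, at every time step, where each kilowatt comes from. The sketch below shows one simple priority order (solar first, then battery, then grid). It is an assumption-laden toy: the numbers are hypothetical, and it ignores battery charging, conversion losses, wind, and backup generators.

```python
def dispatch(load_kw: float, pv_kw: float, battery_kwh: float,
             battery_max_kw: float, hours: float = 1.0) -> dict[str, float]:
    """One dispatch step: serve the load from PV, then battery, then grid."""
    from_pv = min(load_kw, pv_kw)
    remaining = load_kw - from_pv
    # Battery output is limited by both its power rating and its stored energy.
    from_battery = min(remaining, battery_max_kw, battery_kwh / hours)
    remaining -= from_battery
    return {
        "pv_kw": from_pv,
        "battery_kw": from_battery,
        "grid_kw": remaining,
        "pv_surplus_kw": pv_kw - from_pv,
        "battery_kwh_left": battery_kwh - from_battery * hours,
    }


# Hypothetical hour: 1 MW load, 300 kW of solar, a 400 kWh / 500 kW battery.
step = dispatch(load_kw=1000, pv_kw=300, battery_kwh=400, battery_max_kw=500)
print(step)
```

In this example the battery is energy-limited rather than power-limited, so the grid still has to cover 300 kW. That is why storage sizing matters as much as generation.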

Challenges of integration

- Grid interconnections in many regions are undersized for sudden 100+ MW influxes. Upgrading transmission lines, substations, and transformers is costly and time-consuming.
- Regulatory and tariff structures often do not reward flexibility or time-of-use energy.
- Energy markets are volatile; operators must hedge fuel, power cost, and carbon price risks.

7. Social & Environmental Conflict: Communities Push Back

When multibillion-dollar data center campuses descend on rural or suburban regions, tensions inevitably arise.

Local opposition & concerns

- Residents worry about noise, traffic, land use, water consumption, and aesthetic impacts.
- In Ireland, data centers consume ~21% of national electricity; concerns about rising bills, grid strain, and environmental justice have led to moratoria.
- Some municipalities ban or restrict evaporative cooling due to drought and water scarcity. A Reddit commenter noted:

“Traditional air-cooled methods top out; as GPUs push 132 000 W per rack, direct-to-chip cooling becomes inevitable—and some counties already ban evaporative cooling.”

- Local utilities sometimes shift upgrade costs to ratepayers, sparking disputes.

Environmental justice & resource competition

- In water-stressed zones, data centers compete with agricultural, municipal, and ecological demands.
- Land use: large campuses often require hundreds of acres, altering habitats and drainage zones.
- Carbon leakage: if data centers draw on fossil-heavy generation, local emissions may rise disproportionately.

The role of activism & policy

- Activist groups push for stricter environmental impact assessments, public hearings, tax accountability, and moratoria until grid capacity catches up.
- Some regions have introduced data center moratoriums until sustainability plans are proven. Ireland’s Dublin region, for instance, paused new data center approvals until 2028.
- Developers now must collaborate transparently with communities, offer shared benefits (e.g. district heating, green jobs), and integrate environmental offsets.

8. Regulation, Policy & Tax Incentives

This is a battleground of laws, incentives, and mandates shaping the future of data infrastructure.

Incentives & tax breaks

- Many jurisdictions offer tax abatements, property tax relief, or energy incentives to attract operators.
- But escalating public pushback is leading some regions to retract or condition incentives based on sustainability performance.

Mandatory carbon / energy reporting

- Regulators increasingly demand transparency in energy use, carbon intensity, and demand profiles.
- Some countries may require real-time emissions matching or green power procurement rules.
- Incentives may shift from rebates to carbon credits, rewarding operators that bid into grid flexibility or carbon-aware scheduling.

Grid capacity & interconnection rules

- Utilities are imposing stricter interconnection standards, fees, and cost sharing.
- Some jurisdictions require data centers to fund a portion of grid upgrades.
- Zoning and permitting may reclassify large data centers as critical infrastructure, which accelerates or constrains approval processes depending on political climates.

Carbon / emissions taxation & cap-and-trade

- As carbon pricing spreads, data centers will internalize costs of emissions.
- In carbon-constrained markets, operators may need offsets, carbon capture, or zero-carbon sourcing.
- Policies that favor dispatchable zero-carbon power (nuclear, geothermal) may tilt the playing field away from intermittent renewables alone.

Future regulatory trends

- Mandated demand flexibility participation: data centers could be required to modulate load during grid stress.
- Minimum PUE / energy efficiency standards for new builds.
- Water use limits / reporting, especially in drought-prone states.
- Local benefit requirements: to get permits or incentives, data centers may be required to invest in local community infrastructure, energy capacity, or jobs.

9. Cost Models & Colocation / Leasing Markets

Operating a hyperscale campus is extraordinarily capital intensive, leading many players to adopt hybrid models combining owned infrastructure and colocation / wholesale leasing.

CapEx & OpEx dynamics

- Huge upfront costs: land, substations, backup generation and fuel storage, cooling systems, interconnect, fiber, structural systems.
- Ongoing costs: electricity, cooling, maintenance, staffing, upgrade cycles, water, backup fuel.
- Efficiency gains help, but scale is king: the largest operators get access to preferential energy rates, joint procurement, and economies of scale.

Colocation / wholesale leasing

- Many businesses, smaller cloud providers, AI startups, or enterprises lease racks, pods, or cages rather than build their own facility.
- The colocation model allows operators to spread cost across tenants, smoothing risk.
- Some sites serve as multi-tenant campuses, and hyperscale operators often lease excess capacity to others.
- Pricing models often include power + space + cooling + interconnect fees. The high power densities of modern racks push operators to price based on energy footprint rather than simply on square footage.

Risks & margin pressures

- Energy cost volatility is a major risk. If electricity rates spike (or carbon costs rise), margins shrink.
- As cooling and power infrastructure becomes more specialized, renovation or retrofitting is expensive.
- Overprovisioning leads to stranded cost; underprovisioning leads to thermal limits or throttling.
- Market saturation in certain regions (e.g. Northern Virginia, Dallas, Singapore) could result in depressed lease margins.
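To see why energy price volatility dominates the risk list, here is a quick sensitivity sketch. The 100 MW constant load and both electricity prices are hypothetical placeholders, and demand charges, taxes, and carbon costs are left out.

```python
HOURS_PER_YEAR = 8760


def annual_energy_cost(load_mw: float, price_per_kwh: float) -> float:
    """Yearly electricity bill for a constant load, energy charges only."""
    return load_mw * 1000 * HOURS_PER_YEAR * price_per_kwh


base = annual_energy_cost(100, 0.06)     # hypothetical $0.06/kWh
spiked = annual_energy_cost(100, 0.08)   # hypothetical $0.08/kWh
delta = spiked - base

print(f"base: ${base:,.0f}  spiked: ${spiked:,.0f}  delta: ${delta:,.0f}")
```

On these assumed numbers, a two-cent move per kWh shifts the annual bill by about $17.5 million.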

10. Hardware, High-Power Racks & Specialized Equipment

The evolution of compute hardware is reshaping data center design from inside out.

High-density racks & power delivery

- Traditional data center racks used ~5–15 kW per rack. Modern AI racks can reach 50–100 kW or more.
- Some designs project hotspots above 100 kW per rack, especially for stacked GPU modules.
- Delivering that much power and cooling to a single rack demands busbars, high-amperage delivery lines, liquid cooling risers, and careful thermal zoning.
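Two textbook formulas show why a 100 kW rack needs busbars and liquid risers. The sketch below assumes a 415 V three-phase feed, a 0.95 power factor, water as the coolant, and a 10 K temperature rise. All four are illustrative choices of mine, and it further assumes every watt ends up in the liquid loop.

```python
import math


def three_phase_current_a(power_w: float, line_voltage_v: float,
                          power_factor: float) -> float:
    """Line current of a balanced three-phase load: I = P / (sqrt(3) * V * pf)."""
    return power_w / (math.sqrt(3) * line_voltage_v * power_factor)


def coolant_flow_kg_s(heat_w: float, delta_t_k: float,
                      cp_j_per_kg_k: float = 4186.0) -> float:
    """Mass flow needed to absorb heat_w with a delta_t_k temperature rise.
    Default specific heat is for water."""
    return heat_w / (cp_j_per_kg_k * delta_t_k)


RACK_W = 100_000  # a 100 kW rack, as discussed in the text

amps = three_phase_current_a(RACK_W, line_voltage_v=415, power_factor=0.95)
flow = coolant_flow_kg_s(RACK_W, delta_t_k=10)

print(f"line current: {amps:.0f} A, coolant flow: {flow:.2f} kg/s")
```

Under those assumptions a single rack pulls roughly 146 A per phase and needs well over two liters of water per second, which is far beyond what a traditional 5–15 kW rack layout was built for.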

Specialized equipment & accelerators

- AI workloads often use GPU clusters, TPUs, AI ASICs, optical interconnects, and high-speed memory fabrics.
- Hardware is pushing toward chiplet architectures, heterogeneous integration, and reusability / modularity to reduce embodied carbon. For example, the REFRESH FPGA concept proposes reusing retired FPGA dies in new architectures to amortize embodied cost.
- Boards and system design now often incorporate on-board cooling (e.g. cold plates) or integrated liquid loops.

Redundancy, modularity & upgrade cycles

- Hyperscale centers are designed for modular expansion: adding new pods or modules without halting existing operations.
- Hardware refresh cycles (every 3–5 years) produce e-waste; sustainable management of retired gear is critical.
- Energy efficiency per component is paramount: any marginal savings at the chip or board level can cascade across thousands of units.

11. Future Power Sources & Architectural Paradigms

Looking ahead, new energy, architecture, and system paradigms may redefine the future of data infrastructure.

Small Modular Reactors (SMRs) & nuclear microreactors

- SMRs offer dispatchable, low-carbon baseload power at smaller scale, making them attractive for remote or large campus sites.
- Some tech companies already explore direct power purchase agreements tied to nuclear projects.
- Regulatory, licensing, and safety constraints remain major hurdles.

DC power & high-voltage internal architectures

- Some data centers are experimenting with 48 VDC, 380 VDC, or high-voltage DC power distribution to reduce the losses of repeated AC–DC conversion.
- Longer-term, delivering power directly to the chip may remove further inefficiencies in the AC chain.
- The shift to DC also simplifies integration with battery or renewable DC systems.
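The reason fewer conversion stages can help is that stage efficiencies multiply. The sketch below makes that arithmetic visible. Every efficiency value in it is a made-up placeholder chosen only to illustrate the calculation, not a measurement of any real equipment, so the output should not be read as evidence for either architecture.

```python
import math


def chain_efficiency(stages: dict[str, float]) -> float:
    """End-to-end efficiency of a power path: the product of its stages."""
    return math.prod(stages.values())


# Placeholder stage efficiencies (hypothetical, for illustration only).
ac_chain = {"ups_double_conversion": 0.97, "pdu_transformer": 0.98, "server_psu": 0.94}
dc_chain = {"central_rectifier": 0.975, "rack_dc_dc": 0.97}

ac_eff = chain_efficiency(ac_chain)
dc_eff = chain_efficiency(dc_chain)

print(f"AC path: {ac_eff:.1%}  DC path: {dc_eff:.1%}")
```

To evaluate a real design, swap in the datasheet efficiencies of the actual equipment at its expected load. The comparison can look quite different from these placeholders.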

Novel generation & energy carriers

- Hydrogen fuel cells, solid oxide cells, geothermal integration, waste-heat-to-power, or fuel-based microturbines may become part of hybrid energy mixes.
- Coupling data centers with renewable plants (e.g. co-location with solar, wind, or pumped hydro) is an emerging trend.
- Energy storage evolution (batteries, flow batteries, advanced materials) will help buffer intermittency and demand peaks.

AI-native energy orchestration

- The future may see autonomous energy orchestration: AI engines that balance compute, cooling, battery, grid import, and renewables in real time, optimizing costs and carbon.
- Further, the boundary between infrastructure and workload will blur: data centers may dynamically offload or migrate tasks globally based on energy prices, carbon intensity, or weather.

12. Jobs, Careers & the Human Frontline

Behind these colossal machines are people—and the evolving infrastructure demands new kinds of talent.

Emerging roles & skillsets

- Energy systems engineers familiar with microgrids, batteries, renewables, and power markets.
- Data center cooling architects, especially experts in liquid cooling, immersion, and thermal dynamics.
- Energy software engineers / control systems / ML ops for energy: designing carbon-aware scheduling systems.
- Sustainability analysts / ESG coordinators to manage carbon, water, and regulatory reporting.
- Edge/network orchestration engineers to manage large fleets of edge nodes.
- Hardware/tech recycling & lifecycle managers to handle e-waste, refurbishing, and sustainable procurement.

Upskilling & transition

- Traditional electrical and mechanical engineering programs may need to integrate grid theory, energy markets, power electronics, and data science.
- The intersection of IT operations + energy operations becomes a new hybrid domain.
- Regions that host data center campuses often get a talent boost: local universities may partner for internships, labs, and training programs.
- Ethical, policy, and communication roles are critical, since operators will need to liaise with regulators, communities, and environmental groups.

Here’s what I want you to take away:

- We are entering a power revolution behind the digital revolution. If AI is the brain, data centers are the beating heart, and that heart commands energy, water, cooling, and infrastructure at a scale we’ve never seen before.
- The tension between growth and sustainability is real, but not insurmountable. Innovations in cooling, grid integration, advanced energy sources, edge distribution, and carbon-aware systems can tilt the balance toward a future where we compute heavily and tread lightly.
- Pressure from communities, regulators, and policy will determine the shape of data infrastructure. The future isn’t just about tech; it’s about justice, fairness, and shared benefit.
- New careers will emerge at the intersection of computing and energy. If you’re passionate about climate + tech, this frontier is fertile ground.

So here’s what YOU can do:

- Engage & share your voice: comment, ask questions, debate. This infrastructure affects all of us.
- Raise awareness: share this post if you believe the digital future must also be a sustainable one.
- Watch local proposals: when data center projects come near your area, understand the trade-offs and demand accountability.
- Prepare for the new jobs: if you’re in engineering or tech, expand your energy, controls, and sustainability literacy.

Let’s make sure the engines of our future digital world do not burn the foundations beneath them.